
![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

I've had this blog post in draft in one form or another for several years now. I hesitated to complete it, in part because at the best of times cultural observations can easily be misinterpreted and in part because of the role I had in working with many outsourcing vendors across Asia. Whilst the former hesitation has in no way changed, the latter has and I think it's a genuinely interesting topic worth sharing, particularly before my outsourcing memories fade too far.

One thing before I begin: these are opinions based on personal observations. I'll qualify them wherever possible and articulate my experiences as far as I can but they remain just that - my experiences. I'll also be as culturally sensitive as I can but ultimately I'm still going to comment on cultural traits. It'll resonate with some people and it will upset others but I hope that amongst all that, there are some genuinely valuable insights.

Here's what I've learned over many years of outsourcing software projects to India, China and the Philippines.

Who am I to talk about offshoring / outsourcing / other cultures?

In what is now a former life, I spent a great deal of time sending work overseas. In fact, I spent quite a bit of time over there myself in some of the places I'm going to talk about. That former life was as a software architect for Pfizer and in the last half dozen years of my 14-year tenure, I had responsibility for software architecture in the Asia Pacific region. For those not from this corner of the world, Asia Pacific is a sizeable chunk of the globe:

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

The region covers everything from Pakistan in the west to obviously China, over to Japan and down to my corner of the world in Australia and New Zealand. This is a region which is home to more than half the world's population, 2.7 billion of whom are in China and India alone. The size, population and diversity are enormous.

Getting back to Pfizer, whilst I was originally brought on to build software, over the years the organisational strategy for the region changed until they reached a rather simple conclusion: outsource all the things. That was the standard practice and with this change, my role evolved to one of working with vendors rather than working with developers. I got involved in projects ranging from basic brochureware websites to large clinical research systems, mobile apps to POS terminal implementations and all sorts of supporting initiatives such as iPad rollouts, organisation wide operating system and browser upgrades and a raft of other internal "enabling functions". It was broad.

Over the years, I worked on what must have totalled hundreds of projects with dozens of different vendors, particularly in India, China and the Philippines. It's those countries and indeed outsourcing observations in general I want to talk about here. Call it part of my post-corporate therapy, if you will :)

Just one other thing before I start talking about cultures as well: I lived most of my teenage years in Europe and Asia, 3 of them in Singapore while finishing high school there. I lived amongst, went to school with and spent a lot of time socially with the predominant 3 cultures that make up the island nation, namely Chinese, Indian and Malay. I chuckle these days when people suggest that because of my demographic I don't know what it's like to be in a minority group. Yeah, try being a 6' 5" blonde Caucasian in an intensely nationalistic small Asian nation and see how much you stick out compared to everyone else! Anyway, the point is that the observations I'm going to make in this post aren't those made by someone with only a traditional western background to compare experiences to, far from it.

Why outsource?

Let's start with the promise of outsourcing: People are expensive and you've gotta keep a lot of them on hand to build software products and then have them ready to support them. Whether they're working or not, you need to pay them and they may not even have the specific skills you need on each and every project which may mean going out and hiring even more people.

The promise of outsourcing is the human equivalent of elastic cloud scale; you use what you want when you need it and only pay for what you consume. A project comes up so you flick it to a vendor with the resources to do the job thus "unburdening" your own org of needing to keep those heads on staff.

Now to be clear, outsourcing alone doesn't mean sending it offshore, that's another dimension altogether. Let's talk about the value proposition sending work to another country presents.

Why offshore?

The single biggest value proposition of offshoring is precisely the point this ad makes about labour costs:

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

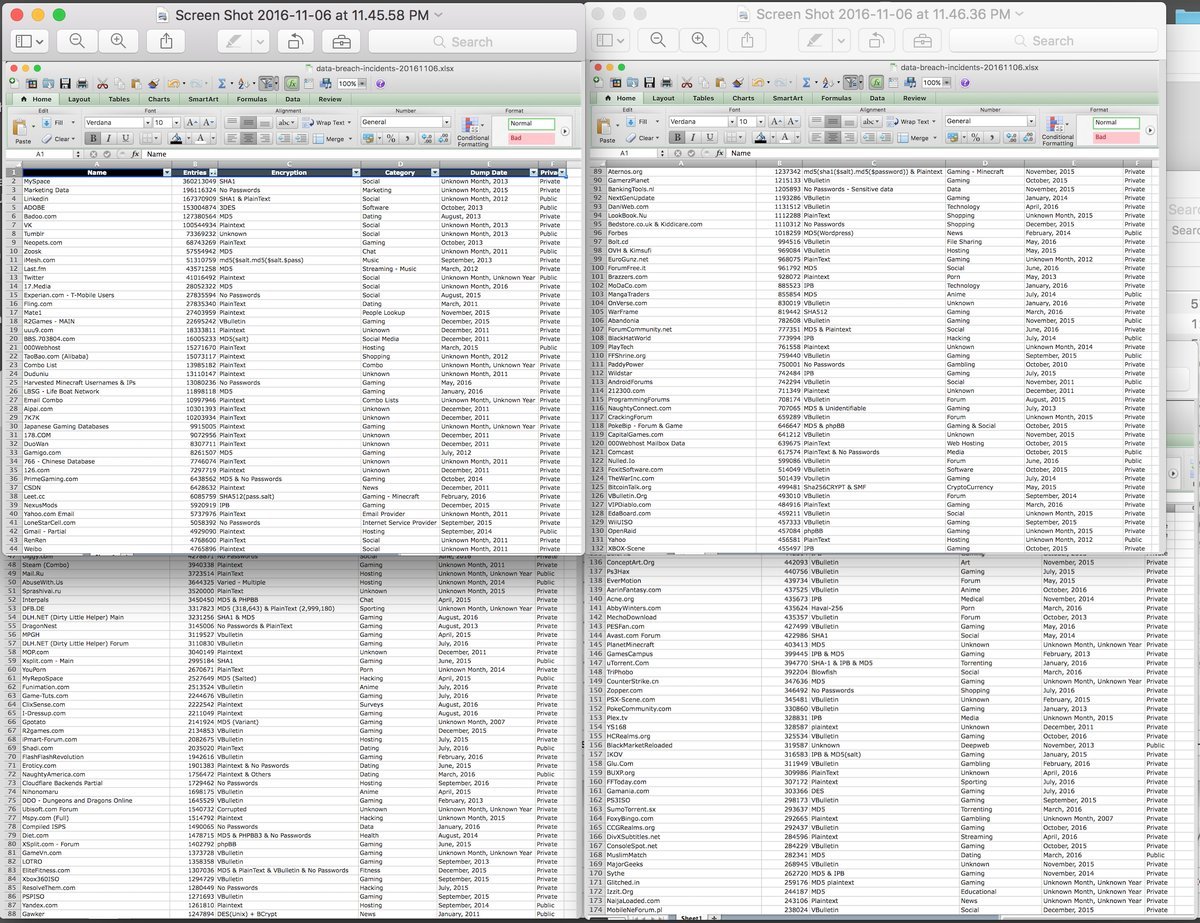

Australia is expensive. America is expensive. The UK is expensive. I pick those 3 because it's where the bulk of my readership comes from and if we look at the average wage by country (you'll always get different figures on this, I'm going by the World Bank's data), they all do exceptionally well compared to the rest of the world:

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

Now, much of the offshoring rationale says "Hey, why are we paying expensive developers in these countries when we could be paying cheap developers in other countries to do the same thing?!". Let's take those 3 very affluent countries and sit them next to the 3 I've had the most experience offshoring to:

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

That's a remarkable difference. Now to be fair, particularly in emerging markets like these 3, the averages are brought down significantly due to large swathes of rural areas and significant portions of the country living in poverty (a quarter of the Filipino population lives below the national poverty line) and the disparity between white-collar roles here and over there is less. In my experience, it was usually more a factor of 4 or 5 times the hourly rate in the expensive markets but the premise that there's a huge wage disparity still holds true. The point is that it makes for a very shiny ROI when put into a management PowerPoint deck.

So that's the basic premise of outsourcing and why organisations like to send the work to other countries. I'll delve deeper into that later on, but let's get onto the three locations in the title first.

India

India has become the canonical offshoring location for organisations all over the world. In fact, I dare say the very name of the country has become synonymous with offshoring and the phrase "Indian Outsourcing" is ingrained into many an IT manager's psyche. There are some good reasons for this:

- The British colonial history means they have very good English skills

- They have a huge population - the world's second largest with more than 1.3B people

- They've invested heavily in business process outsourcing (BPO) and high tech jobs

They also have some seriously big players in the outsourcing game: Tech Mahindra, Infosys and Wipro to name just a few. I've had experience with all of them across many projects as well as with a bunch of smaller players. There were some interesting cultural trends that really stuck out at me and again, these are my observations but they're ones made from many years of experience. (India is also the one country discussed here that I didn't visit personally.)

Many of the developers in India are produced en masse via intensive training programs focusing on a discrete technology. Now of course we have universities and other tertiary avenues available in this part of the world too, but what particularly stuck out at me in India was the niches the developers would fit into. An example of this is Google's recent news about training 2 million Android developers in India - that's a huge number of people to train around a very specific stack. What I'd typically find is people would specialise in one discrete part of a software component and that's all they'd do. Now of course we also have that in Aus or the UK or anywhere else, but we also have the "full stack" developer and they're a much more common beast over here.

The way this would manifest itself in projects is that you'd end up with a lot of people involved. I'd look at estimates for work and quiz the account manager: "Why are there so many roles represented here?" (work would usually be broken down by roles and their costs). There'd always be junior people and senior people and people who specialised in the API and others to do the website and others to do the DB and so on and so forth. Now to be clear, there are projects that require this due to their sheer size but the same pattern would repeat across even small units of work. It would get to the point where I'd joke about the separation of duties extending to the point where someone would write the open tags in a website and someone else would write the close tags! This has a cost that is far more significant than just the obvious people time too, something I'll delve into more a little later.

The other really stark observation about India (far more so than the other countries I'll talk about) is the rate of churn. People were always leaving. We've certainly seen the traditional philosophy of company loyalty eroded here over the years, but over there it was unusual to see someone sticking with the organisation for more than a year or two. I suspect a large part of that was the scramble up the corporate ladder and hopping to a better opportunity somewhere else can accelerate that. And really, who could blame them? But the churn meant for a lot more friction on projects and that's just something that never appears on a line item in a proposal. I'll come back to that later on when I talk about the fungibility of developers.

Another pattern I found time and time again when outsourcing to India was that they'd want really detailed documentation. This is always going to be a contentious issue and there are many different views of how much should be done under what circumstances. But more so in India than the other two locations I'll talk about shortly, detail was important to them. There were many occasions where we would make assumptions that a feature requirement clearly implied certain things only to later discover they were deemed "out of scope". Now that can happen in any project in any part of the world, but it was extremely prevalent in India.

To round out on a strength, what seemed to work best in India was units of work that were discrete in scope (they were fairly fixed), well documented and followed patterns that the vendor was already competent in. I think a lot of it has to do with that "developer factory" mentality where there are a lot of people who are trained in very specific things and if you can task them with those things then that's your "happy path".

China

I spent a lot of time in China and it's a fascinating place for many reasons. For example, you've got 721 million internet users there so we're talking 2.5 times more people connected than in the US. But it's much more significant than that too because almost 90% of the US is already on the web whereas only just over half of China is. But for those who are on the internet, it's not the same web as we know in other parts of the world. Thanks to The Great Firewall there's no Facebook, no Twitter, no YouTube and very onerous laws around personal privacy and anonymity. It means that China has an internet ecosystem all of its own and here's a stark representation of that: India has 462 million internet connected people and is the 6th most represented country on this blog with 4.04% of all visitors coming from there this year. China - with one and a half times as many connected people - is the 49th most represented country here with only 0.21% of my traffic. (Note that these figures are from Google Analytics and could be skewed due to Chinese blocks on Google, but every other metric I have from Cloudflare stats to anecdotal evidence all points to a near non-existent Chinese audience.)

Much of the promise with China is that you've got a huge population of rapidly urbanising people that are getting high quality educations. They're a very tech-savvy nation and we're seeing them go from strength to strength on that front with everything from emerging (and now dominant) hardware providers such as Huawei and Lenovo (my personal favourite for PC gear), to services such as Alibaba which is now well and truly on the world stage. If you're into tech and not watching what's going on in China, you're missing what will probably be the world's most significant source of technology innovation and growth for decades to come.

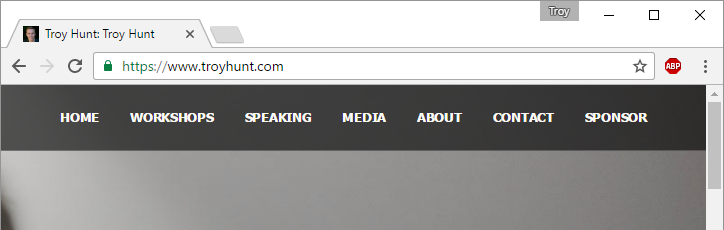

One thing that makes China difficult to work with though is English literacy. They don't have the colonial background that India does and because they're such an insular country so reliant on their own Chinese language services, exposure to English is far more limited and clear communication can be tricky. It means that you're pretty much always going to be at least one layer of indirection away from those actually doing the work; there'll be an account manager with (some) English skills and they'll translate for the tech folks. Now often this would be the case anywhere in the world, but it means that when you really need to have a detailed discussion it is literally Chinese whispers and a lot gets lost in translation.

Consider also what this means for code quality. Functionally, the code itself may be just fine but we're talking about people with little or no English language skills writing English syntax (no, in case you're wondering, you don't write HTML tags, JavaScript calls or C# syntax in Chinese)! Consider what that means for the quality objective of self-documenting code:

self-documenting (or self-describing) source code and user interfaces follow naming conventions and structured programming conventions that enable use of the system without prior specific knowledge

In other words, if your code clearly describes what it does then it will be easier to read and easier to maintain, not just for the folks who wrote it, but for those who come along in the future. This is also particularly relevant when we talk about documentation within the code itself or as external artefacts of the project. Now that may not be a problem if forever and a day you commit to that vendor, but if you ever want to take the code back yourself or shuffle it between other cultures then you've got a serious issue. I found it particularly difficult reviewing code written by folks in China for precisely that reason.
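To make the self-documenting point concrete, here's a minimal sketch in Python. Both functions are hypothetical (they're not from any project I worked on) and they compute exactly the same thing; the only difference is whether the names tell the next reader what's going on.

```python
# Hypothetical example: two functions with identical behaviour.

# Opaque version: the reader has to reverse engineer what a, b and f mean.
def calc(a, b, f=0.0):
    return a * b - a * b * f


# Self-describing version: the names carry the intent, so a later
# maintainer does not need the original author on hand to explain it.
def invoice_total(unit_price, quantity, discount_rate=0.0):
    subtotal = unit_price * quantity
    discount = subtotal * discount_rate
    return subtotal - discount


# Same inputs, same result; only the readability differs.
same_result = calc(10, 3, 0.5) == invoice_total(10, 3, 0.5)
print(same_result)
```

Now imagine the second version with names chosen by someone who isn't comfortable in the language the rest of the team reads, and you can see how quickly it degrades towards the first.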

Keep in mind that language barriers extend to the UI as well; the same people that may not have much of a grasp on English when they're writing code are also writing labels and headings and all sorts of other externally facing content in the app. That's particularly troublesome in a place like China and it meant for a lot of unexpected (and un-budgeted) revisions, but even in India there are many cultural nuances with the English language. Again, it's the sort of thing that doesn't normally appear as a line-item on a proposal but it definitely has a cost. Browsing through my phone for Beijing pics, this one stuck out at me as it perfectly illustrates the sort of language that led to lots of to-and-fro when outsourcing to China:

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

Coming back to Chinese censorship for a moment, IMHO this still rates as a "high risk" factor for Chinese outsourcing. The risk can manifest itself in various ways ranging from people in China not having access to reference resources (my blog sometimes wasn't accessible because it used to run on Google's Blogger service) to all of GitHub being blocked. The unpredictability of the government's censorship regime is something you should be worried about if you're doing work in China. Yes, many people run VPNs anyway, but they're regularly blocked and at best they're "grey" when used to circumvent The Great Firewall. So you have to ask yourself: do you really want to be asking your partners there to circumvent government controls in order to deliver work for you?

One of the practices I always pushed all vendors for was proper work breakdown structures in their proposals. The rationale was that if we're going to build an app with, say, 20 features then I want to know how much each one is going to cost. I can then take that breakdown, go back to the business and have a discussion about how important each one is and if there were opportunities to reduce cost by discarding lower value features. It would also allow me to sanity check the proposal and challenge anything I considered to be excessive. But try as I might, in China in particular I just couldn't draw vendors to this way of representing costs. They'd give me breakdowns but it would be how many juniors and seniors and project managers and testers and how much they all cost. To my mind, that was irrelevant because there was nothing actionable I could do with it. But there was just something within the way multiple different vendors across China worked that couldn't get me information in this way and it usually just boiled down to "here's the cost of the whole thing".

However, by having visibility to the man hours for the entire project as well as the total cost, I was able to get much better insight into the effort invested to build the solution. What I consistently found was very high man hours compared to estimates from vendors back home. In fact, I remember one case quite clearly where the project's dollar estimate was identical to a local vendor in Australia yet the man hours were an order of magnitude greater. Yet even then, there was organisational push-back on the local vendor due to their high hourly rate (the rationale for that is best left for when I've completed my post-corporate recovery program...)

Actually, just tangentially whilst I'm on this point, if you're going to offshore the work to somewhere like China then do exactly this: get estimates from local vendors as well, compare the estimated effort and if you see major disparities then push back on the higher numbers. Low hourly rates only make sense when you're comparing a similar number of hours!
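Here's a rough sketch of that comparison in Python. The vendors, rates and hours are all made up for illustration (they're not figures from a real proposal) and the 2x threshold for "major disparity" is purely my assumption; pick whatever number makes sense for you.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A vendor estimate; every figure used below is hypothetical."""
    vendor: str
    hourly_rate: float  # expressed in one common currency
    hours: float

    @property
    def total_cost(self) -> float:
        return self.hourly_rate * self.hours


def effort_ratio(offshore: Estimate, local: Estimate) -> float:
    """How many offshore hours are quoted per local hour."""
    return offshore.hours / local.hours


def has_major_disparity(offshore: Estimate, local: Estimate,
                        threshold: float = 2.0) -> bool:
    """Flag the estimate for push-back when effort differs by `threshold` or more."""
    return effort_ratio(offshore, local) >= threshold


# Illustrative numbers only: one tenth of the rate, ten times the hours.
local = Estimate("local vendor", hourly_rate=150.0, hours=400)
offshore = Estimate("offshore vendor", hourly_rate=15.0, hours=4000)

print(local.total_cost, offshore.total_cost)
print(effort_ratio(offshore, local))
print(has_major_disparity(offshore, local))
```

With these made-up inputs the two totals come out identical even though the hourly rate looks ten times better, which is the whole point: the rate alone tells you nothing until you put the hours next to it.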

Lastly, China is now getting expensive. I wish I could have seen percentage increases in my salary anywhere near what the guys reporting to me in Beijing were getting, which was well into the double-digit percentages whilst I languished at somewhere around the CPI mark (usually just under 3%). Because of the rapid wage increases in China, according to Glassdoor you're looking at about $25k/y for a software developer in Beijing and only about $7k in Mumbai. That's a very significant difference and it certainly wasn't that multiple when you go back 5 years or more.

On the plus side for China though, one of their strengths (particularly over India) was the ability to get down to work with minimal documentation. Now of course your level of documentation is going to differ by project and indeed by organisation, but in a case like Pfizer's where technology folks were viewed as part of the "burden" they were trying to release, chances are it's not going to be particularly robust. There's a real "can do" attitude in China where they'd pick up anything and have a go at it. This can be risky as well in that my experiences were they'd say "yes" to anything (and that can actually mean "no" in a high-context culture, more on that later), but in general I found them very accommodating which is a big plus.

I'd be more inclined to outsource to China where requirements were more fluid and there was less formality required. The proviso would be that I was happy to stick with the Chinese vendor for a longer period of time as there was a greater friction involved in transitioning projects due to the language barriers.

The Philippines

Had I stayed on at Pfizer, the Philippines is where I would have loved to set up an in-house dev shop (I know, developers are a "burden", but bear with me). I spent a lot of time in Manila and I think I can pretty confidently say that Filipinos are the friendliest bunch of people you'll meet anywhere. Their English language skills are also exceptional (it's one of the two national languages next to Filipino), courtesy of a long history of American settlement. You have zero problems anywhere in the capital being understood and conversing openly and the same goes for discussions with outsourcing vendors.

One thing I've noticed in Australia over recent years is a really clear shift to the Philippines for call centres where previously you'd have been speaking to someone in India. For example, every time I call my telco I end up speaking with a Filipino and I suspect it's a combination of their strong English skills, friendly dispositions and emerging tech sector that's driven the shift. Whilst India has been in the BPO game for many years, the Philippines seems to have become prominent only more recently and that's reflected in the salaries as well (they're much closer to the Indian levels than the Chinese ones).

I mentioned poverty in the Philippines earlier on and it's hard to escape it. You see it everywhere, particularly as you drive through ramshackle housing on the way to the airport.

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

This has an impact on the tech scene as well and one of the starkest patterns I saw outsourcing there was their strong affinity to PHP. This isn't intended to be a derogatory comment about PHP; the LAMP stack is really appealing to folks who don't have much cash. But conversely, the Microsoft stack that I worked in and we were increasingly unifying around in Pfizer was simply much harder to find competence in. You'd go to a vendor and their default position was "Yeah, we can do that in PHP and MySQL". Although we did find vendors that could work with Microsoft's technologies, I always felt it wasn't quite the first-class citizen it was in other countries and that left me just a bit wary about how well-equipped they were to support it.

Cost wise, the Philippines was consistently lower certainly than China and regularly than India too. The economics of each of those countries mean it will likely stay that way for some time too.

In many ways, the Philippines was the best of all worlds; combine the cost I just mentioned with the best English skills of the lot, an overtly friendly nature and that same "can do" attitude I observed in China and it makes a lot of sense. But that's not to say it's an easy solution and that I'd immediately outsource all the things there either. There are other fundamentals you have to consider about the very foundation the principles of offshoring are built on. Let's take a look at those now.

The economics of offshoring are changing

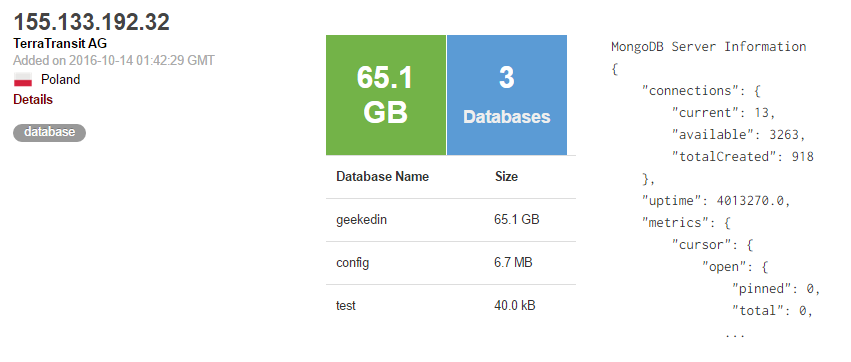

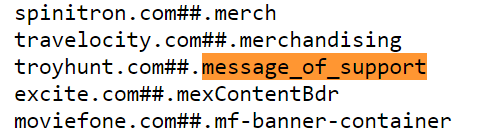

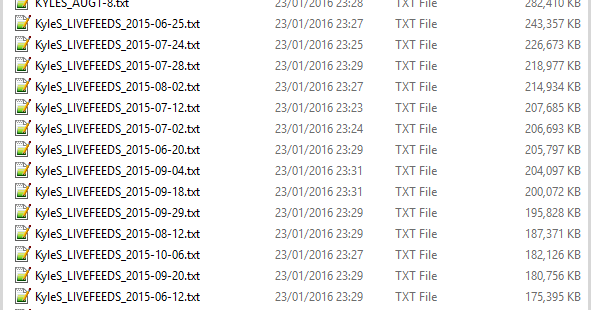

The economics of offshoring are very heavily based on the wage disparities between the country doing the offshoring (for example, the US) and the country being outsourced to (for example, China). But the economics that made sense in the past are very different to the economics today and this picture speaks volumes:

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

This is data from the World Bank and shows that massive disparity in wage growth between the two countries. The gaps are narrower between say, Australia which weathered the GFC exceptionally well due to our resources boom and a country like India which is yet to see the same rate of development as China. But the disparity is still there and the point is simply this: where there once might have been an eleven-fold difference in US and Chinese wages in 2010, it declined to a seven-fold difference in only 5 short years. At what multiplier does it no longer make sense to offshore? Because trend wise, it's just a matter of time until you're at 6 or 5 or who knows where from there.
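If you want to play with that "matter of time" question, here's a back-of-the-envelope sketch in Python. It takes the eleven-fold and seven-fold figures above and assumes the multiple keeps shrinking by the same percentage every year. That constant-rate assumption is mine, not the World Bank's, and real wage growth won't be that tidy, so treat the output as a rough feel for the trend rather than a forecast.

```python
import math


def annual_decline_factor(start_multiple: float, end_multiple: float,
                          years: float) -> float:
    """Yearly factor by which the wage multiple shrinks, assuming a constant rate."""
    return (end_multiple / start_multiple) ** (1 / years)


def years_until(current_multiple: float, target_multiple: float,
                factor: float) -> float:
    """Years for the multiple to fall from current to target at that yearly factor."""
    return math.log(target_multiple / current_multiple) / math.log(factor)


# Figures from the text: roughly 11x in 2010 falling to roughly 7x five years later.
factor = annual_decline_factor(11, 7, 5)

# Assumption: the same rate of decline simply continues.
years_to_six = years_until(7, 6, factor)
years_to_five = years_until(7, 5, factor)

print(round(factor, 3), round(years_to_six, 1), round(years_to_five, 1))
```

Swap in whatever multiple you think is the break-even point for your own organisation and see how far away it is under that assumption.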

Now again, we're looking at average salaries across a country like China which still includes many rural areas and the difference is going to be less stark once you're in Beijing or Shanghai, but the trend is the same. Offshoring often means playing a long-game where you really need to commit for many years (I explained some of the reasons why above) and the risk you run is that the economies will change fundamentally in that time.

Vendors don't build software, people build software

One of my personal bugbears is the assertion that a non-sentient being is writing software for you: "We used XYZ vendor on the last project and they were awesome!". What they really mean is "When we used XYZ vendor they had some awesome people doing the work". Now that may sound semantic but the difference is really, really important because people are a very fluid commodity, especially in a place like India.

I was on a project where a developer in Mumbai was doing the lion's share of the work (I'll call her Avni for the sake of example). Anyway, we invested quite a bit of time and money skilling Avni up in a particular technology with which she had familiarity but not the level of strength we would have liked. That all went well until one day... no Avni! With the benefit of hindsight, I suspect she'd headed off to have a baby which, in my experience, is something you normally have a number of months' notice of. However, the message we got at the time was that she was sick but don't worry, here's another Avni for you, just like the last one!

Well before this event happened, I wrote a blog post about The commoditisation of the coder and it stands true to this day. Developers are not a fungible commodity; that is, you cannot simply replace one with another identical item and expect them to perform the same. The kid operating the cash register down at your local store - now he's a fungible commodity because if he buggers off you can just drop in another kid and they can almost certainly operate the till with the same level of professionalism.

In Avni's case though, she'd built up knowledge about the project she was working on. She knew why we'd made certain design decisions, she understood the business objectives and how the project owner had moulded the requirements over time. She had the training - not the role - and it walked out the door with her. I've regularly seen assertions from offshore companies that we should be confident in their ability to simply replace a resource as easily as we would a light bulb in the kitchen. This is a dangerously incompetent belief that demonstrates a fundamental misunderstanding of what's actually involved in building software and if you hear it said, you should be asking some pretty serious questions in reply.

You can argue that a professionally run outsourcing company will have processes in place to minimise friction when people leave and equally, you can argue that people leave even permanent positions if they're working onshore within your organisation. All of this is true but it all raises the question: when you outsource, are you putting your trust in an organisation that's resilient to people movements, or are you ultimately at the mercy of a few key individuals?

The fallacy of adding more people

One of the problems that plagues non-technical leadership (and there are a lot of them out there in technology leadership positions with genuinely very little idea about technology) is that they view work output as being a linear scale with people resources. In other words, add more people and things will be done faster.

This misunderstanding is exacerbated when offshoring due to the low rates. Your classic pointy-haired boss will look at the people cost and go "Wow, if they're only half the price of the local folks we can get twice as many of them and do the project in half the time"! This is the fallacy that Brooks's Law warns against (adding manpower to a late software project makes it later) and it comes from Fred Brooks's The Mythical Man-Month which anyone involved in delivering software should read at least once in their life. One of the guys I used to work with would regularly draw this analogy:

9 women can't have a baby in 1 month

Brooks talks a lot about the cost of connections in his book, namely that the more people you add to a project, the more connections you have. When there are 2 people there is only 1 connection; person A talks to person B. But when there are 3 people the number of connections triples because A talks to B, A talks to C and B talks to C. 4 people is 6 connections, 5 people is 10 and it goes up rapidly from there. What it means is that due to the mechanics of how teams operate, 5 people in India at 20% of the cost is not the same price as 1 person in Australia, far from it.
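The connection counts above follow the standard pairwise formula n(n-1)/2, which is easy enough to sketch in Python. The function name is just something I've made up for illustration, and it only counts who might need to talk to whom; it makes no attempt to put a dollar figure on that communication.

```python
def connections(people: int) -> int:
    """Number of distinct person-to-person connections in a team of `people`."""
    if people < 0:
        raise ValueError("people must be non-negative")
    # Every pair of people is one connection: n * (n - 1) / 2
    return people * (people - 1) // 2


# Team sizes mentioned in the text, plus a couple of larger ones.
for team_size in (2, 3, 4, 5, 10, 20):
    print(team_size, connections(team_size))
```

Doubling a team from 5 to 10 people takes you from 10 connections to 45, which is why headcount and coordination effort don't scale together.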

Adding people costs time:

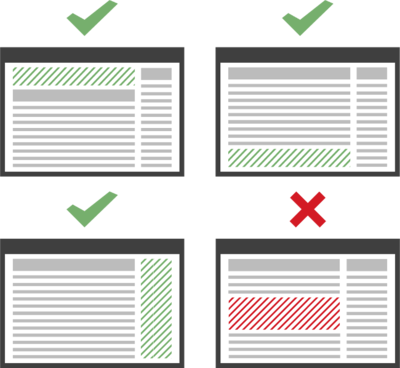

![Offshoring roulette: lessons from outsourcing to India, China and the Philippines]()

This paradox is often neglected and particularly when the people are cheap due to offshoring, the temptation is there to simply load more onto the project.

Code quality

This is a particularly prickly topic and I know there will be many people with many strongly held views on this. My anecdotal experience - and I'll stress that I have no hard metrics to back this up - is that code quality suffers when you outsource from a market like Australia, the US or the UK and you send the work to somewhere low cost. Let me articulate what I believe the drivers for this are:

Firstly, in my experience (there's that caveat again!), there is a much greater desire for the folks building software products in low cost markets to climb the corporate ladder. I mentioned the rate of churn in India as people jump between jobs looking for that management role and certainly I saw the same thing happen over and over again in China and the Philippines too. Smart technical people wanted to "progress" by stopping doing the things they were doing well in order to become managers. Now that happens everywhere, no doubt about it, but here and in the organisations I regularly visit in the US and Europe, there are many people who genuinely want entire careers building products, whereas in the markets I'm discussing here, they almost exclusively want to move to a position where they're instructing others. There just seems to be less interest - less passion - in code and inevitably that's going to impact quality.

Secondly, the outsourcing industry is big business that's always looking to increase output and decrease costs; they are very bottom line focused. I mentioned Tech Mahindra before and they've got well over 100k people on staff there. These companies are ultimately just factories producing a product and they're working ruthlessly to make the process more efficient. They're doing that by having a very large number of very cheap resources and as the saying goes "you get what you pay for".

Finally, the impact of quality is not necessarily clear and present at the time the project is delivered. Often it'll take months or even years to realise the cost of the "technical debt" you've been handed. The Chinese language example earlier on is perfect; after the vendor has built the product and handed it over, what's it going to cost when you want to add features later on and the code is illegible? The longer game in a software product's lifecycle is often neglected as those holding the purse strings rush towards immediate term savings.

Here's a perfect example that embodies these three observations: I never saw a single unit test written for a project. Not one. I would push vendors on what their structured testing process was and it always boiled down to humans manually making sure things work. The developers had usually never even heard of the concept and writing extra code would cost more money; it was that simple in their minds. Of course this led to all the problems you'd expect from not having automated tests, which brings us back to the third point above: the longer-term maintainability and costs of the project were terrible.
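For anyone who hasn't come across the concept, a unit test is simply a small piece of code that automatically checks another piece of code. Here's a minimal sketch in Python using the standard library's `unittest` module. The `apply_discount` function and its rules are hypothetical and purely illustrative; they're not taken from any project discussed here.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business rule: reduce price by percent (0 to 100)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    """Each test pins down one expectation a human would otherwise check by hand."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)
```

Saved to a file, this can be run with `python -m unittest <filename>`. The point is that once the tests exist, re-checking the behaviour after every change costs nothing, which is exactly the longer-term saving that manual testing never gives you.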

Do not underestimate the cost of cultural nuances

We're all aware that there are many different cultural norms across the globe that are often at odds with each other. In Western society, you'd blow your nose on a handkerchief if it was running yet in Asia this is seen as somewhat gross. Have a bowl of noodles in China and you'll be slurping away at it but that would be uncouth over here. They're minor cultural nuances though; there are far more serious ones that impact cross-cultural teams.

Someone sent me this video recently that was made by a couple of blokes from the subcontinent humorously explaining how to interpret the very Indian trait of the head wobble:

One piece of training I did at Pfizer which was genuinely interesting was around cultural awareness and particularly how many cultures communicate very implicitly. I like the way the video above embodies that; what we interpret as "yes" in Australia can mean "no" in India. It's often referred to as a high-context culture and it means that many things aren't said in the direct way that those of us down here, in particular, tend to communicate. Instead, they're implied and that can lead to serious communication issues.

The problem when you put this in an outsourcing context - particularly when you're remote - is that it's very easy to have misunderstandings. Think about how many times sentiment or emotion is lost in written communication with your own culture and multiply that way out. These can be misunderstandings about costs, inclusions, features, bugs, timelines - you name it. I'd see it happen day in and day out and again, it's something that just doesn't feature as a line item. I mean imagine it:

Cost of cross-cultural teams not understanding each other: $20,000

It never happens! Perhaps it slots into the amorphous blob that is "contingency costs" or something similar, but it's enormously difficult to pin down to a figure.

When outsourcing offshore works

One thing I want to be very clear about here is that I'm not saying sending work overseas doesn't work, far from it. What I am saying is that there are certain challenges that are rarely considered in the ROI and they can have a fundamental impact on the success of outsourcing.

In my post-Pfizer days, I spend a lot of time going into companies around the world and training their developers. When I spend time with teams, the conversation often turns to what work is being done offshore and as much as there are all sorts of bad stories like the ones you've read about here (and possibly experienced yourself), there are also some very positive experiences.

Where things seem to work especially well is when the model is blended; it's not "outsource all the things", it's "let's get our guys working with their guys and all doing what we do best". It sounds profoundly obvious when I put it like that and it genuinely does work well for many orgs. They retain knowledge of the IP within their onshore teams, get those folks facing the customer and doing a lot of the design work then they ship the work which is most suitable for "factory" style development to India.

I would also avoid any models that put dependencies on individuals within the outsourcing org. The Avni example above highlights why this is and you have to accept that people turnover is far more likely to happen in an environment like that. Instead, focus on scenarios which lend themselves to greater numbers of people doing more cookie-cutter work so that if one or a bunch of them transition out, you're not losing too much project knowledge when it happens.

Often, I've spoken to people that have sent team members over to these far-flung lands so that they could get involved with the offshore people. Not just ingrain them into the organisation's processes, but go and drink beer with them and create a genuine bond. This is a very different attitude to "let's just throw it over the fence and unburden ourselves" and by all accounts, it seems to work well.

And finally, we've got some great tooling these days to make the process of sending work elsewhere more predictable. Enforceable code quality checks, build services, DVCS and all manner of other mechanisms can remove unpredictability from the process. More than ever, these are essential when you need to build predictability into what can otherwise be a less predictable working model.

Summary

More than anything I've said here to this point, here's what's really important: if you're looking at hourly rate as a metric for outsourcing success, you're doing it very, very wrong!

Outsourcing to other countries is often a decision made by those who have the least understanding of how the mechanics of software development actually work. They're drawn in by the attraction of low rates and their measure of success usually stops at what the immediate term cost is and whether the requirements are delivered. It's much harder to factor in the longer-term costs of things like maintainability, availability and of course, security. But when budgets and objectives focus so heavily on the immediate term, is it any wonder that those simultaneously controlling the money whilst not understanding the technology make ill-informed outsourcing decisions?

Cheap offshoring is the McDonald's of the software industry; it's a mass-produced product that whilst highly in demand, isn't very good for you if you do it all the time. Treat it as part of a balanced diet, make informed decisions and don't be blinded by the low sticker price without thinking about the real cost you're going to pay.

This was a really highly-requested blog post that received a lot of positive responses when I recently asked about writing it. It wasn't an easy one to pull together whilst trying to be both honest and culturally sensitive and I do hope I've done it justice. As with all posts, I'd love comments from people that have had their own experiences - both good and bad - and are willing to share them here. Thanks for reading.
